Hello Guys,

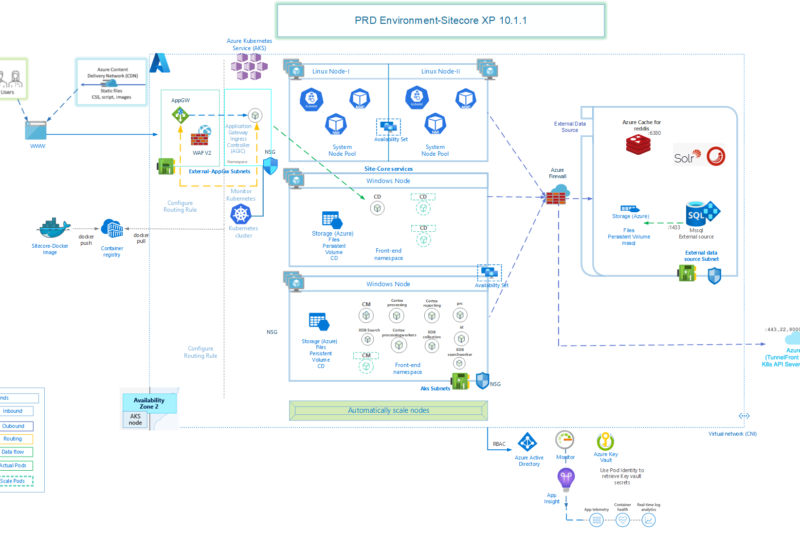

In one of my recent SharePoint 2016 deployments (hosted on Azure VMs), we ran into an issue on a farm where SQL Server 2014 Managed Backup was enabled for the SharePoint databases. The combined size of all the databases was about 2 TB!

The problem began when users started using the SharePoint application extensively. The SQL transaction logs filled up more frequently, and SQL Managed Backup triggered full backups of those databases multiple times during peak business hours.

Whenever a backup started, communication between the SharePoint server and the SQL server showed issues such as ping delays and even request timeouts, resulting in poor application performance.

Obviously, running backups in peak business hours was the core of the problem, but given the benefits that SQL managed backup comes with, we didn’t want to look for any other backup alternatives.

So, we decided to add another Network Interface Card (NIC) to the SQL servers. The idea was that SharePoint would communicate with SQL over this new NIC (on a different IP) while backups continued to use the existing NIC.

It all went well at first. We were able to add the additional NIC easily to both DB servers (two servers in an Always On configuration) by following https://docs.microsoft.com/en-us/azure/virtual-machines/windows/multiple-nics

We stopped the VM from the Azure portal (it must be in the deallocated state), created a NIC (it should be in the same virtual network and subnet as the primary NIC), and ran the following commands:

$VirtualMachine = Get-AzureRmVM -ResourceGroupName "MySPResourceGroup" -Name "SP2016Trial"

Add-AzureRmVMNetworkInterface -VM $VirtualMachine -Id "/subscriptions/05c6c9ab-cfda-4d0c-ac6b-23f39f370ba2/resourceGroups/MySPResourceGroup/providers/Microsoft.Network/networkInterfaces/AKNIC2"

$VirtualMachine.NetworkProfile.NetworkInterfaces

# Set the NIC 0 to be primary

$VirtualMachine.NetworkProfile.NetworkInterfaces[0].Primary = $true

$VirtualMachine.NetworkProfile.NetworkInterfaces[1].Primary = $false

# Update the VM state in Azure

Update-AzureRmVM -ResourceGroupName "MySPResourceGroup" -VM $VirtualMachine

Perfect… well, so far 🙂

We started the VM and both NICs were available. We configured the new NIC with a static IP and the same gateway as the primary NIC, and all was good: both IPs were accessible from the SharePoint servers.

Then we went ahead and updated the Azure Load Balancer, which was pointing to the SQL Always On listener, changing its backend IPs to the new NIC IPs, and the SharePoint application went down!

No matter what we did after that (recreating the LB, disabling Windows Firewall, etc.), SharePoint wouldn’t communicate with the SQL listener over the load balancer, even though the SQL instances were directly accessible on the new IPs.

My guess was that the Windows failover cluster had registered the NIC 1 IPs in DNS, so the FQDN would not resolve to the new IPs. This conclusion has not been verified yet, though.

We spent 3 days on this, and yes, we opened a Microsoft Premier ticket as well. After 2 days of troubleshooting, this is what they had to say:

“I will have my Team Lead get with you Monday upon his return. I am not sure why this isn’t working and it ‘should’ be possible so, I will have a more experienced rep call you.”

Well, it was time to move on…

I thought, if we couldn’t make SharePoint-to-SQL communication work on a different IP, why not see whether Managed Backup could use that one instead…

After a lot of head-banging, the idea finally came: the “IP Routing Table” 🙂

The routing table stores information about IP networks and how they can be reached (either directly or indirectly).
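To make the idea concrete, here is a minimal Python sketch of how a routing table lookup picks a route: among all entries whose network contains the destination, the most specific one (longest prefix) wins. The table entries, the gateway `10.0.0.1`, and the interface numbers are made up for illustration and are not the values from our servers.

```python
import ipaddress

# Hypothetical routing table: (destination network, gateway, interface index).
# These values are illustrative only, not taken from the real SQL servers.
ROUTES = [
    ("0.0.0.0/0", "10.0.0.1", 12),        # default route
    ("10.0.0.0/24", "on-link", 12),       # local subnet
    ("40.71.240.24/32", "10.0.0.1", 23),  # host route to a single storage IP
]

def pick_route(destination, routes=ROUTES):
    """Return the most specific route whose network contains the destination."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(net), gateway, iface)
        for net, gateway, iface in routes
        if addr in ipaddress.ip_network(net)
    ]
    # The longest prefix (most specific network) wins.
    return max(candidates, key=lambda route: route[0].prefixlen)

print(pick_route("40.71.240.24"))  # matched by the /32 host route
print(pick_route("8.8.8.8"))       # falls back to the default route
```

This is why a single host route is enough to steer traffic for one destination onto another interface without touching the default route.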

So, I ran the command “route print” on the SQL servers, which shows all the existing routes. I saw there was no route specified for the target Azure Blob storage used by the backups. Since it was a SQL Managed Backup in East US, I simply pinged the Azure Blob storage endpoint to find the IP it was currently using:

ping anupam2.blob.core.windows.net

Pinging blob.bl5prdstr09a.store.core.windows.net [40.71.240.24] with 32 bytes of data:

Well, I had something to work with 🙂

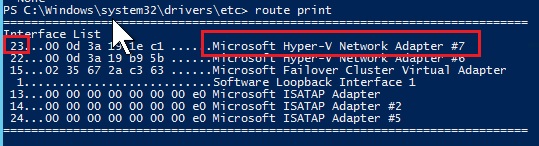

We first need to identify the network interface index number. The output of “route print” lists the network interfaces at the top. The first number on each line is the index we are interested in, and to its right is the name of the NIC as it appears in the network adapter settings window.

Now, we know the destination IP, gateway IP and source network interface index number.

We also need a metric, a number that indicates the cost of the route so that the best route can be selected when multiple routes to the same destination exist. A common use of the metric is to indicate the number of hops (routers crossed) to the network ID. I didn’t go into that level of detail: I saw many other entries in the routing table using 261, so I just used it. Network gurus can use a properly calculated value.
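As a rough illustration of what the metric does, the sketch below picks between two routes to the same destination by taking the lowest metric. The interface numbers and the metric `5` are invented for the example; only `261` appears in our routing table.

```python
def best_by_metric(routes):
    """Among routes to the same destination, return the one with the lowest metric."""
    return min(routes, key=lambda route: route["metric"])

# Two hypothetical routes to the same destination over different interfaces.
same_destination_routes = [
    {"interface": 12, "metric": 261},
    {"interface": 23, "metric": 5},
]

print(best_by_metric(same_destination_routes)["interface"])  # 23
```

In our case there was only one route to the storage IP, so the exact metric value mattered little.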

Then I just executed this command:

route ADD 40.71.240.24 MASK 255.255.255.255 10.102.x.xxx METRIC 261 IF 23

Here:

40.71.240.24 is the destination IP

255.255.255.255 is the subnet mask

10.102.x.xxx is the gateway

261 is the metric

23 is the network interface index

And in the “route print” output I could see an entry added.

I started the SQL backup to the Azure Blob URL and voilà, I could see in Task Manager that the traffic was going over the selected network interface card 🙂

Of course, we can’t rely on a single IP address when using Azure storage, so I downloaded the Azure Datacenter IP Ranges and looked up the IP ranges for the East US location.

Yes, there are quite a few, but the same route add command also accepts an IP range (subnet), so I deleted the previous single-IP route and added the entire range:

route ADD 40.71.240.0/27 MASK 255.255.255.224 10.102.x.xxx METRIC 261 IF 23

Notice that I used a different mask here. When I had added the IP 40.71.240.24 earlier, this is the mask that showed up in the routing table 🙂 (255.255.255.224 is also the mask that corresponds to a /27 prefix.) I am sure network gurus will figure this out too.
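For anyone who wants to check the mask rather than take my word for it, here is a small Python sketch using the standard `ipaddress` module. It shows how a prefix length maps to a subnet mask and confirms that the IP we pinged earlier falls inside the /27 range.

```python
import ipaddress

# The range used in the route command above.
network = ipaddress.ip_network("40.71.240.0/27")
print(network.netmask)        # 255.255.255.224
print(network.num_addresses)  # 32

# The single storage IP found with ping sits inside that range.
print(ipaddress.ip_address("40.71.240.24") in network)  # True

# A single host is a /32, which is why the first route used 255.255.255.255.
host = ipaddress.ip_network("40.71.240.24/32")
print(host.netmask)           # 255.255.255.255
```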

In the same way, we can add other IP ranges to the routing table if required.
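If there are many ranges to add, typing each command by hand gets tedious. The sketch below is one possible way to generate the commands from a list of CIDR ranges. The helper name `route_add_commands` and the `<gateway-ip>` placeholder are my own for illustration; substitute your real gateway and interface index. It emits the plain destination plus MASK form rather than the CIDR form.

```python
import ipaddress

def route_add_commands(cidr_ranges, gateway, metric, interface_index):
    """Build one Windows 'route ADD' command string per CIDR range."""
    commands = []
    for cidr in cidr_ranges:
        # ip_network raises ValueError if the range has host bits set.
        net = ipaddress.ip_network(cidr)
        commands.append(
            f"route ADD {net.network_address} MASK {net.netmask} "
            f"{gateway} METRIC {metric} IF {interface_index}"
        )
    return commands

# Only the range from this post; add the other East US ranges you need.
ranges = ["40.71.240.0/27"]
for command in route_add_commands(ranges, "<gateway-ip>", 261, 23):
    print(command)
```

The script only prints the commands, so you can review them before running anything on the SQL servers.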

We changed the Azure Load Balancer back to the old NIC 1 IPs, and the SharePoint application was up again.

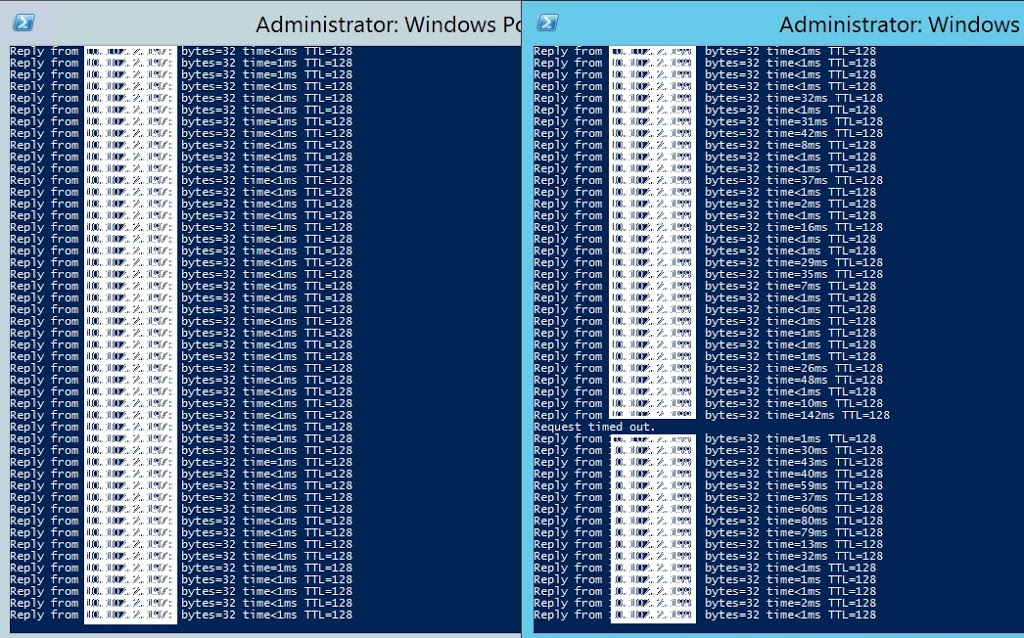

Performance is now good even when backups are running. You can see the difference: the first ping is from the SharePoint server to the SQL server, and the second is to the SQL server IP used by the backups. SharePoint communication isn’t impacted even while backups are running and pings to the backup IP show delays and timeouts.

Update from Microsoft: they confirmed that even though the additional NIC uses bandwidth from the first NIC, you get better performance because the two NICs have different queues.

So, all good, time to relax and have a nice weekend 🙂

Enjoy,

Anupam